Auspex: Ecosystemic Emergence as Generative Soundscape

Mike Cassidy / Kristian North

Department of Music, Concordia University, Canada

me[at]mikecassidy.info / kristian.north[at]gmail.com

http://www.mikecassidy.io / http://www.kristiannorth.info

Abstract

This paper presents an overview of Auspex, an agent-based artificial life ecosystem that generates multichannel soundscapes using corpus-based concatenative synthesis techniques aligned with the acoustic niche hypothesis. Insights into its development are drawn from a review of two prototype systems: BoidGran, a boid-driven granular synthesizer, and Swarmscape, an audio-visual performance system that emphasizes the acoustic emergence of swarming bodies. The analysis also considers conceptual approaches to contextualizing the system’s output.

Keywords

Soundscape composition, acoustic ecology, swarm dynamics, concatenative synthesis, artificial life, acoustic niche hypothesis, FluCoMa.

DOI https://doi.org/10.23277/emille.2023.21.001

Anyone who has heard the sounds of a natural habitat has spent a moment listening to it as music. Yet, contrary to Western notions of musical creation, environmental music emerges with no composer, conductor, or leadership of any kind. The self-organisation of an ecosystem’s acoustic behaviour is most clearly described by Bernie Krause’s Acoustic Niche Hypothesis, whereby a species evolves to emit sounds in a niche not occupied by other species in the biome (Krause 1993). This spectral autopoiesis shares emergent qualities with position-based behaviours such as flocking and swarming. In Ancient Rome, an augur would interpret bird flocking as deterministic omens, a practice known as “taking the auspices” and performed by the auspex, or bird-seer. Today, the proliferation of machine learning technologies has opened new possibilities for interpreting complex data. In acoustics, sounds can be organised by spectral and timbral descriptors into a latent data space known as the corpus. How, then, could a corpus space of acoustic information self-organise according to acoustic and behavioural considerations, and could this emergent structuring be considered music? In recent years, Barry Truax, one of the pioneers of soundscape composition, has expanded its definition by referring to it interchangeably as context-based composition, in which the context of a created sonic environment serves the intention of the composition (Truax 2018). In our endeavour to integrate these phenomena creatively within an electroacoustic composition, we have developed a generative soundscape system, Auspex. What follows is a technical overview and a conceptual analysis which asks: what context has been created at the convergence of acoustic and behavioural emergence?

Acoustic and Behavioural Emergence

By definition, a decentralized system cannot be understood by studying its parts in isolation. Instead, it is the self-organisation of its parts that creates the emergent phenomena characterizing its function. Put most plainly, emergence is a macro-structural consequence of micro-interactions between agents, which may interact without any awareness of the developing structure. Music can be considered the emergent phenomenon of organised sound. By extension, soundscape composition can be considered a recontextualization of ‘the perception of sound as it pertains to a necessary epistemological shift in the human relationship to our physical environment’ (Dunn 1998: 3).

A classic example of emergence in natural systems is swarming. A swarm is a meso/macro structure that forms cohesive patterns based on laws of attraction between agents. Swarming combines regular and chaotic properties of self-organisation to produce a collective behaviour of entities that exhibits a singular identity. A swarm typically follows no central coordination, yet it possesses behavioural abilities that can qualify as unified cognitive functioning. This “swarm intelligence” has been observed in nature and simulated in computational models for problem-solving in optimization tasks. In 1986, Craig Reynolds developed an algorithm for simulating emergent flocking/swarming behaviour that follows only three rules, each computed relative to an agent’s local neighbours: cohesion (steering toward their average position, or centre of mass), separation (steering away to avoid crowding), and alignment (matching their average heading) (Reynolds 1987). Granular synthesis exemplifies a phenomenon of acoustic emergence that could be classified under swarm dynamics. Dividing a waveform into micro-sound grains (<50 ms) and stochastically rearranging them unlocks an interaction between the local waveforms and “meso-scale time patterns characterized by emergent properties, which are not present in either global or local parameters” (Keller/ Truax 1998: 4; Truax 1994). It is therefore no coincidence that self-organising principles in synthesis have proven especially effective at recreating naturally occurring stochastic sounds, such as those of a flowing river or a crackling fire.
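Reynolds’ three rules can be sketched in a few lines of Python. This is a minimal illustration, not code from BoidGran or Auspex (which were built in Max/MSP and Unity 3D respectively): the neighbourhood radius, minimum separation distance, rule weights and speed limit are arbitrary assumptions, and refinements such as a limited field of view are omitted.

```python
import math
import random
from dataclasses import dataclass

@dataclass
class Boid:
    pos: list  # [x, y, z] position
    vel: list  # [vx, vy, vz] velocity

def mean(vectors):
    """Component-wise mean of a non-empty list of 3D vectors."""
    n = len(vectors)
    return [sum(v[i] for v in vectors) / n for i in range(3)]

def step(boids, radius=2.0, min_sep=0.5, w_coh=0.01, w_sep=0.05,
         w_ali=0.05, max_speed=1.0, dt=1.0):
    """Advance every boid by one tick using Reynolds' three steering rules."""
    new_vels = []
    for b in boids:
        neighbours = [o for o in boids
                      if o is not b and math.dist(o.pos, b.pos) < radius]
        v = list(b.vel)
        if neighbours:
            centre = mean([o.pos for o in neighbours])
            heading = mean([o.vel for o in neighbours])
            for i in range(3):
                v[i] += w_coh * (centre[i] - b.pos[i])   # cohesion
                v[i] += w_ali * (heading[i] - b.vel[i])  # alignment
            for o in neighbours:                         # separation
                d = math.dist(o.pos, b.pos)
                if 0 < d < min_sep:
                    for i in range(3):
                        v[i] += w_sep * (b.pos[i] - o.pos[i]) / d
        speed = math.sqrt(sum(c * c for c in v))
        if speed > max_speed:  # clamp so the flock stays numerically tame
            v = [c * max_speed / speed for c in v]
        new_vels.append(v)
    # Apply all updates together so every boid reacts to the same snapshot.
    for b, v in zip(boids, new_vels):
        b.vel = v
        b.pos = [p + c * dt for p, c in zip(b.pos, v)]

# Example: 16 boids (the voice count of BoidGran) with random starting states.
random.seed(1)
flock = [Boid([random.uniform(-1, 1) for _ in range(3)],
              [random.uniform(-0.1, 0.1) for _ in range(3)])
         for _ in range(16)]
for _ in range(100):
    step(flock)
```

Computing every new velocity before moving any boid keeps the update independent of list order; updating in place would let early boids influence later ones within the same tick.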

Swarm simulations have seen extensive popularity in digital media applications, offering the ability to decouple low-dimensional parametric input from higher-dimensional target domains, with applications in synthesis and sound spatialization systems (Schacher/ Bisig/ Kocher 2014). Tim Blackwell and Michael Young have drawn analogies between swarming bodies and an ensemble of musicians, where temporal structures may only be perceivable at a distance (Blackwell/ Young 2004). Whereas their Swarm Music uses the gesture of swarming behaviour, Auspex goes further, characterizing these agents and immersing the listener within their environment.

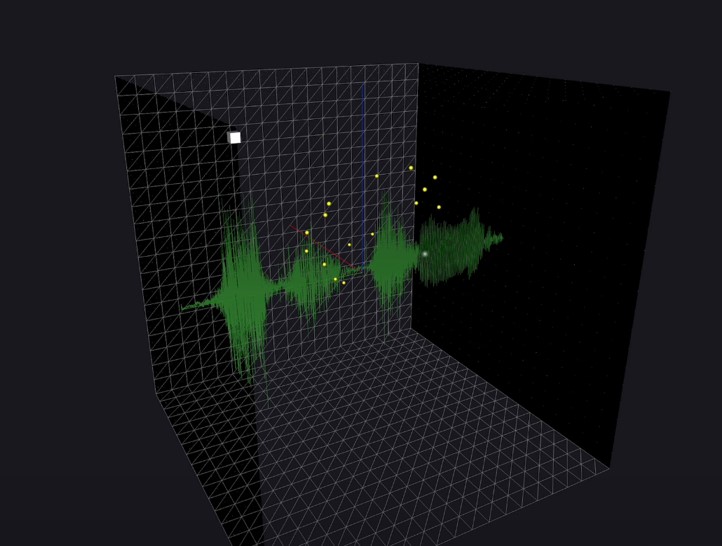

Figure 1.

BoidGran, a 16-voice granular synthesizer controlled by the boid flocking algorithm

Sound 1.

Excerpt of BoidGran performing a flocking gesture

Prototypes

BoidGran

What can now be considered the initial prototype of this work was designed not as a composition but as an instrument: BoidGran, a boid-driven granular synthesizer written in Max/MSP. Sixteen particles in a 3D vector space were mapped to the 16 voices of a granular synthesis engine. The traditional granular controls of grain play-position, rate, and duration, along with multichannel spatialization controls, were mapped to the position of each particle, and the particles’ positions were governed by the boids algorithm to produce flocking behaviour. The instrument was capable of expressive and organic control over spectromorphological models of particle-based gestures (Smalley 1997), outputting ecologically informed particle synthesis similar in spirit to Keller and Truax’s model of environmental granular synthesis (Keller/ Truax 1998) and to other boid-controlled granular systems (Blackwell/ Young 2004; Bisig/ Neukom/ Flury 2008).
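The position-to-parameter mapping can be illustrated as follows. The text does not specify which axis drives which control, so the axis assignments, the ±1 bounding cube, the four-octave rate range and the 10 to 50 ms grain durations below are all hypothetical choices (the upper bound echoes the micro-sound scale of under 50 ms).

```python
import math
from dataclasses import dataclass

@dataclass
class GrainParams:
    play_position: float  # normalized read point in the source buffer, 0..1
    rate: float           # playback-rate multiplier (1.0 = original pitch)
    duration_ms: float    # grain length in milliseconds
    azimuth_deg: float    # horizontal angle for multichannel panning

def normalize(value, lo=-1.0, hi=1.0):
    """Map value from [lo, hi] to [0, 1], clamping out-of-range input."""
    return min(1.0, max(0.0, (value - lo) / (hi - lo)))

def particle_to_grain(x, y, z):
    """Hypothetical axis assignment: x -> play position, y -> rate,
    z -> grain duration, and the (x, y) angle -> azimuth."""
    play_position = normalize(x)
    # Two octaves either side of the original pitch, spaced exponentially.
    rate = 2.0 ** (4.0 * normalize(y) - 2.0)
    # Micro-sound grain lengths between 10 and 50 ms.
    duration_ms = 10.0 + 40.0 * normalize(z)
    azimuth_deg = math.degrees(math.atan2(y, x)) % 360.0
    return GrainParams(play_position, rate, duration_ms, azimuth_deg)

def flock_to_voices(positions):
    """One granular voice per particle, as in a 16-particle/16-voice setup."""
    return [particle_to_grain(*p) for p in positions]
```

An exponential mapping is used for rate so that equal steps along `y` correspond to equal musical intervals.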

Swarmscape

The original objective of the audio-visual composition Swarmscape was to implement BoidGran in a larger compositional context. We set out to develop a system that could generate music without human intervention or leadership, mimicking the self-organising behaviours of a swarm in nature. Initial efforts involved stochastic conducting of parametric controls dictating compositional movements based on degrees of attraction, cohesion, and separation. This produced compelling sonic gestures, yet efforts to create more complex music were limited by the system’s reliance on positional control for synthesis, without any consideration of higher “state-based” parameters of individual boids within the swarm. To create more nuanced compositional complexity, it became necessary to extend the behaviours of the boids to inhabit the cybernetic dynamics of an ecosystem. Rather than depending on absolute Cartesian position as the basis for parameter modulation, an ecosystem provides an ecologically grounded model that emphasizes an agent’s behavioural state as its primary dynamic force.

Establishing an ecosystem begins by introducing a variety of species, which sets in motion a complex web of interactions: attraction, avoidance, and competition for resources. These interactions drive the life cycles of birth, death, and reproduction, reflecting the predator-prey population dynamics described by the Lotka-Volterra equations, which shape the ecosystem’s phase transitions. With the intention of distributing a total of 48 agents across 3 species, an additional method was needed to distinguish these agents, both as individuals and as species, from a synthesis perspective. The natural step was to assign each agent its own waveform, enabling dynamic buffer swapping. This is where the analysis of input source material could be augmented by machine learning and concatenative synthesis.
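For reference, the classic two-species Lotka-Volterra system can be integrated numerically as below. Auspex itself is agent-based, so this continuous model only idealizes the population cycles the text alludes to; the parameter values and the fourth-order Runge-Kutta integrator are illustrative assumptions, and the three-species food chain of Auspex is not modelled here.

```python
import math

def lotka_volterra(x, y, alpha, beta, delta, gamma):
    """Classic two-species model: x = prey, y = predators.
    dx/dt = alpha*x - beta*x*y,  dy/dt = delta*x*y - gamma*y"""
    return alpha * x - beta * x * y, delta * x * y - gamma * y

def rk4_step(x, y, dt, params):
    """Advance (x, y) by one fourth-order Runge-Kutta step of size dt."""
    k1 = lotka_volterra(x, y, *params)
    k2 = lotka_volterra(x + dt / 2 * k1[0], y + dt / 2 * k1[1], *params)
    k3 = lotka_volterra(x + dt / 2 * k2[0], y + dt / 2 * k2[1], *params)
    k4 = lotka_volterra(x + dt * k3[0], y + dt * k3[1], *params)
    x += dt / 6 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0])
    y += dt / 6 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1])
    return x, y

def invariant(x, y, alpha, beta, delta, gamma):
    """Quantity conserved by the exact solution; drift reveals numerical error."""
    return delta * x - gamma * math.log(x) + beta * y - alpha * math.log(y)

def simulate(x0, y0, params, dt=0.01, steps=5000):
    """Integrate from (x0, y0) and return the prey and predator trajectories."""
    xs, ys = [x0], [y0]
    x, y = x0, y0
    for _ in range(steps):
        x, y = rk4_step(x, y, dt, params)
        xs.append(x)
        ys.append(y)
    return xs, ys

# Arbitrary illustrative parameters: (alpha, beta, delta, gamma).
PARAMS = (1.0, 0.1, 0.075, 1.5)
prey, predators = simulate(10.0, 5.0, PARAMS)
```

With these values, prey and predators oscillate around the equilibrium (gamma/delta, alpha/beta) = (20, 10), and comparing `invariant` at the start and end of the run confirms that the integrator is not artificially damping or amplifying the cycles.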

Concatenative synthesis, also known as audio mosaicing, is a data-driven sound synthesis technique that assembles sound samples in series based on timbral analysis (Schwarz 2007; Zils/ Pachet 2001). The Fluid Corpus Manipulation toolkit (FluCoMa), developed at the University of Huddersfield, provides an extensive assortment of data analysis processes packaged to enable real-time analysis of sound. FluCoMa allows for the dynamic creation of a corpus of sound units that can be used directly for concatenative synthesis. The toolkit also includes dimensionality-reduction tools, which here provide linear control over the higher-dimensional parameters of the boids simulation. Finally, the state-based and procedural rendering needs of the system dictated a move from Max/MSP to a combination of SuperCollider, for FluCoMa analysis and audio synthesis, and Unity 3D, for the ecosystem simulation. The two applications communicate bidirectionally using the Open Sound Control (OSC) network protocol.
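To illustrate the OSC link, the sketch below encodes a message by hand following the OSC 1.0 encoding rules (NUL-padded strings, a comma-prefixed type-tag string and big-endian arguments) and sends it over UDP. It is written in Python for brevity rather than the C# a Unity project would use; the address pattern and argument layout are hypothetical, and in practice an existing OSC library would normally be preferred. Port 57120 is sclang’s default listening port.

```python
import socket
import struct

def osc_string(s):
    """OSC-string: ASCII bytes, a terminating NUL, then NUL padding to a multiple of 4."""
    data = s.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)

def osc_message(address, *args):
    """Encode an OSC 1.0 message with int32 ('i'), float32 ('f') and string ('s') arguments."""
    tags, payload = ",", b""
    for arg in args:
        if isinstance(arg, bool):
            raise TypeError("booleans are not handled in this sketch")
        if isinstance(arg, int):
            tags += "i"
            payload += struct.pack(">i", arg)  # big-endian 32-bit integer
        elif isinstance(arg, float):
            tags += "f"
            payload += struct.pack(">f", arg)  # big-endian IEEE 754 float
        elif isinstance(arg, str):
            tags += "s"
            payload += osc_string(arg)
        else:
            raise TypeError(f"unsupported OSC argument: {arg!r}")
    return osc_string(address) + osc_string(tags) + payload

def send_osc(packet, host="127.0.0.1", port=57120):
    """Send one message over UDP; 57120 is sclang's default port."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))
```

For example, `send_osc(osc_message("/agent/state", 7, 0.82, 0.35))` would transmit a hypothetical agent index with two normalized state values, which an `OSCdef` on the SuperCollider side could receive.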

Composition Example

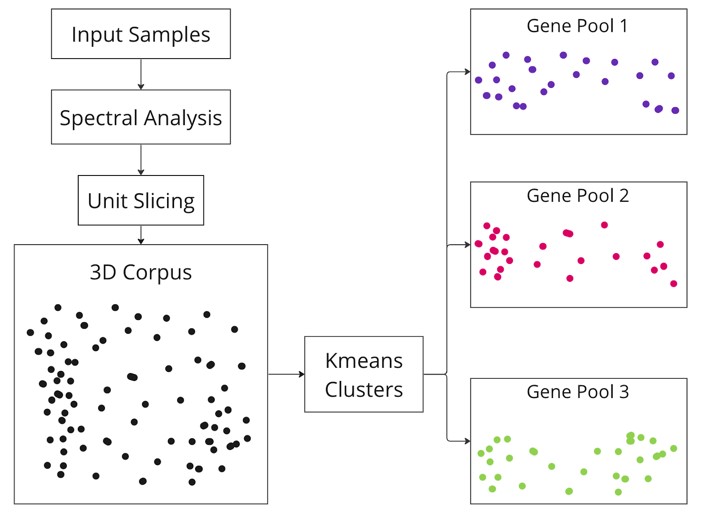

Upon starting the simulation, a folder of audio source material is analysed and sliced into segments based on novelty, then arranged as units in a 3-dimensional corpus space according to similarity of timbral qualities. Next, the units are partitioned by K-means clustering into three clusters representing distinct gene pools, from which three unique species derive their sonic genomes. With the data analysis complete, three sample units from each gene pool are randomly selected and set as source waveforms for three spawned agents.

Figure 2.

The analysis and arrangement of input samples into a corpus using the FluCoMa Toolkit
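The clustering and seeding steps described above can be sketched in plain Python. FluCoMa provides its own k-means object (FluidKMeans in SuperCollider); the version below is an independent illustration that assumes each unit has already been reduced to a 3D descriptor point, uses a farthest-point initialisation of our choosing, and draws founders with a seeded random generator. Unit names such as `slice_000` are hypothetical.

```python
import math
import random

def init_centroids(points, k, rng):
    """Farthest-point initialisation: a random first centroid, then repeatedly
    the unit farthest from every centroid chosen so far."""
    centroids = [list(rng.choice(points))]
    while len(centroids) < k:
        far = max(points, key=lambda p: min(math.dist(p, c) for c in centroids))
        centroids.append(list(far))
    return centroids

def kmeans(points, k=3, iterations=50, seed=0):
    """Lloyd's k-means on 3D descriptor points; returns (labels, centroids)."""
    rng = random.Random(seed)
    centroids = init_centroids(points, k, rng)
    labels = []
    for _ in range(iterations):
        # Assignment: every unit joins its nearest centroid.
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c]))
                  for p in points]
        # Update: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # an empty cluster keeps its previous centroid
                centroids[c] = [sum(axis) / len(members) for axis in zip(*members)]
    return labels, centroids

def seed_species(units, labels, k=3, founders=3, seed=0):
    """Draw `founders` random units from each cluster (gene pool)."""
    rng = random.Random(seed)
    pools = {c: [u for u, lab in zip(units, labels) if lab == c] for c in range(k)}
    return {c: rng.sample(pool, min(founders, len(pool))) for c, pool in pools.items()}
```

Farthest-point seeding makes it unlikely that two initial centroids fall in the same timbral region, which plain random seeding can do.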

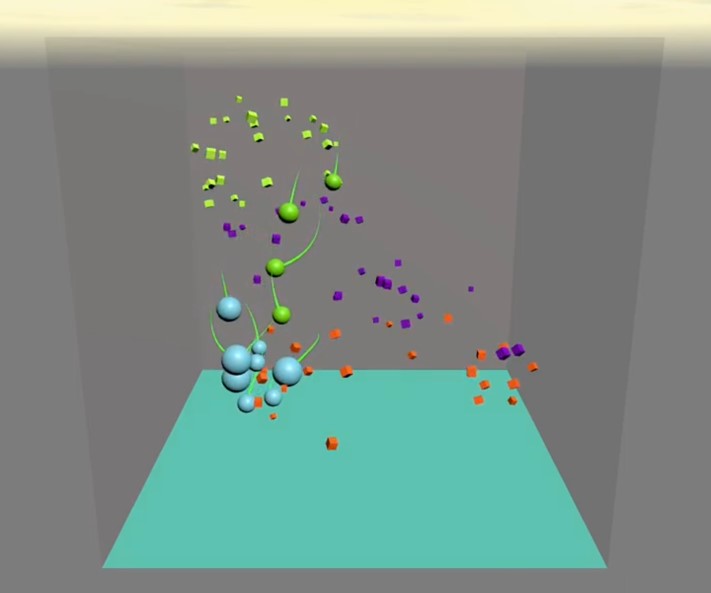

These agents now occupy and engage with the environment; each species has a natural predator and a natural prey, which its agents avoid and seek, respectively. Positioning near a predator decreases an agent’s health, and positioning near prey increases it. If health surpasses a specified threshold, a new agent is born with a waveform loaded from its species’ cluster, and the mother agent’s health is halved. The age of each agent increases in step with an in-world time system, and an agent dies if either its health reaches zero or its age exceeds a maximum limit. Through this birth and death lifecycle, a species can either reach a population limit of 16 or go extinct once its population reaches 0. Population growth within this range produces increasingly coherent swarming behaviour among agents of a species, resulting in increasingly complex and gestural sonic content. Given the triangular predator-prey relationship between the 3 species, the extinction of one species creates a resource imbalance that eventually leads to the collapse of the entire ecosystem.
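The lifecycle rules can be condensed into a single update function. Only the population limit of 16 comes from the text; the health increments, birth threshold, lifespan, newborn health and the direction of the triangular food chain are hypothetical, and the `proximity` callback stands in for the spatial predator and prey queries of the actual Unity simulation.

```python
import random
from dataclasses import dataclass

POP_LIMIT = 16          # per-species ceiling stated in the text
BIRTH_THRESHOLD = 1.0   # hypothetical health level that triggers a birth
MAX_AGE = 300           # hypothetical lifespan in world-time ticks
HEALTH_GAIN = 0.05      # hypothetical per-tick gain when near prey
HEALTH_LOSS = 0.05      # hypothetical per-tick loss when near a predator

def prey_of(species):
    """Triangular food chain (hypothetical orientation): 0 eats 1, 1 eats 2, 2 eats 0."""
    return (species + 1) % 3

def predator_of(species):
    """Inverse of prey_of: the species that hunts `species`."""
    return (species - 1) % 3

@dataclass
class Agent:
    species: int
    waveform: str        # name of the corpus unit this agent granulates
    health: float = 0.5  # hypothetical newborn health
    age: int = 0

def tick(agents, proximity, gene_pools, rng):
    """Advance one world-time tick and return the surviving population.

    proximity(agent) -> (near_prey, near_predator) stands in for spatial
    queries; gene_pools maps species -> list of unit names.
    """
    newborns = []
    for a in agents:
        near_prey, near_predator = proximity(a)
        if near_prey:
            a.health += HEALTH_GAIN
        if near_predator:
            a.health -= HEALTH_LOSS
        a.age += 1
        population = sum(1 for b in agents + newborns if b.species == a.species)
        if a.health > BIRTH_THRESHOLD and population < POP_LIMIT:
            a.health /= 2  # the mother's health is halved
            newborns.append(Agent(a.species, rng.choice(gene_pools[a.species])))
    # Death: health exhausted or maximum age exceeded.
    return [a for a in agents + newborns if a.health > 0 and a.age <= MAX_AGE]
```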

Each agent granulates its loaded waveform, with the parameters of the granular engine dictated by the agent’s behavioural state. Each species has its own octave range within which the granular play-rate can cycle, ensuring that its output occupies a distinct spectral niche. As an agent moves closer to its prey, its health rises, increasing its movement velocity while narrowing the durational window of granulation, which further stabilizes its timbral character and fundamental frequency. As an agent ages, the maximum amplitude of its output diminishes correspondingly, and the starting play-point of granulation moves through the waveform. Each agent’s synthesis output is also enveloped at a trigger rate and phase that depend on its position relative to other agents of its species, so that agents closer together fire more rapidly and more synchronously, recalling the phase-coupled oscillations of fireflies and other insect species (Ermentrout 1991). As the agent moves around its environment, its Cartesian coordinates are used to spatially diffuse its audio output when performed in real-world spaces with multichannel speaker arrays.

Figure 3.

The environmental display of Auspex, shown with 2 species and 3 gene pool clusters

Sound 2.

Excerpt of an Auspex performance. Here, a monkey species dies, leaving an empty acoustic niche that is eventually filled by birdsong
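The state-to-synthesis mapping and firefly-like envelope synchrony described earlier can be sketched together. The numeric ranges are hypothetical, and the phase update is a proximity-weighted variant of the Kuramoto model of coupled oscillators, chosen here as a familiar stand-in; it is neither Ermentrout’s adaptive firefly model nor necessarily what Auspex implements.

```python
import math
from dataclasses import dataclass

# Hypothetical lowest play-rate for each species; each spans one octave above it.
OCTAVE_BASE = {0: 0.5, 1: 1.0, 2: 2.0}

@dataclass
class AgentState:
    species: int
    health: float  # normalised to 0..1
    age: float     # normalised to 0..1 (1 = maximum lifespan)
    pos: tuple     # (x, y, z) in the simulated environment
    phase: float   # envelope-trigger phase in radians

def grain_settings(a, cycle):
    """Map behavioural state to granulator settings (hypothetical ranges).
    `cycle` sweeps the play-rate through the species' octave."""
    return {
        "rate": OCTAVE_BASE[a.species] * 2.0 ** (cycle % 1.0),  # stays in-octave
        "window_ms": 10.0 + 190.0 * (1.0 - a.health),  # healthier -> narrower window
        "speed": 0.5 + a.health,                       # healthier -> faster movement
        "amplitude": 1.0 - 0.8 * a.age,                # older -> quieter
        "start": a.age,                                # play-point drifts through buffer
    }

def step_phases(agents, dt=0.01, base_hz=2.0, coupling=4.0):
    """Proximity-weighted, Kuramoto-style update for one species: nearby
    conspecifics raise the trigger rate and pull phases together."""
    new_phases = []
    for a in agents:
        others = [b for b in agents if b is not a]
        weights = [1.0 / (1.0 + math.dist(a.pos, b.pos)) for b in others]
        closeness = sum(weights) / len(weights) if weights else 0.0
        omega = 2 * math.pi * base_hz * (1.0 + closeness)  # closer -> faster
        pull = sum(w * math.sin(b.phase - a.phase) for w, b in zip(weights, others))
        new_phases.append((a.phase + dt * (omega + coupling * pull)) % (2 * math.pi))
    for a, p in zip(agents, new_phases):
        a.phase = p

def coherence(agents):
    """Kuramoto order parameter: 1.0 when every envelope fires in phase."""
    n = len(agents)
    c = sum(math.cos(a.phase) for a in agents) / n
    s = sum(math.sin(a.phase) for a in agents) / n
    return math.hypot(c, s)
```

The `coherence` value rises toward 1 as neighbouring agents lock their envelope triggers, which offers one way to quantify how synchronously a species is firing.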

Over time, an imbalance in population across the species leads to a corresponding imbalance in the composition. What starts as the harmonious interplay of three spectral species eventually erodes into the dominance of a single frequency band: the Darwinian victor at the finale of the Auspex. During the performance, newly recorded source material can be continuously analysed and added to the gene pools of a species, ensuring an endlessly evolving eco-compositional timbre.
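One possible way to fold newly recorded material into the existing gene pools is to assign each new unit to the nearest cluster centroid in descriptor space. The text does not say how Auspex makes this assignment, so the nearest-centroid rule and the running-mean centroid update below are assumptions.

```python
import math

def nearest_pool(descriptor, centroids):
    """Index of the gene pool whose centroid lies nearest the new unit."""
    return min(range(len(centroids)), key=lambda c: math.dist(descriptor, centroids[c]))

def add_unit(gene_pools, centroids, unit_name, descriptor):
    """Append a newly analysed unit to its nearest pool and move that centroid
    toward it with a running mean (a sequential, MacQueen-style k-means update).
    Assumes each centroid is the mean of the units already in its pool."""
    c = nearest_pool(descriptor, centroids)
    gene_pools[c].append(unit_name)
    n = len(gene_pools[c])
    centroids[c] = [m + (x - m) / n for m, x in zip(centroids[c], descriptor)]
    return c
```

Because each centroid drifts toward the material added to it, a gene pool can slowly change character over a long performance.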

Taking Auguries

There is meaning in space before the meaning that signifies. Taking auguries is believing in a world without men; inaugurating is paying homage to the real as such. (Serres 1995)

Ecological psychologist James Gibson (1979) defines environmental affordance in terms of ‘the complementarity of the animal and the environment’ (p. 119). Considering environmental sound as music frames that music as a human affordance of the environment, a provision for relational listening. Furthermore, soundscape composition, as the human interpretation of the soundscape, creates new affordances from an acoustic environment. Thus, establishing environmental sound as music relies not only on observing emergent structures but must also include the sensory and subjective experience of the soundscape. Compared with the prototype systems described above, Auspex prioritizes auditory perception and phenomenological interpretation of the soundscape, moving away from system creation to explore the deeper hermeneutic processes that underlie the meaning of the composition.

As Jon Appleton (1996) notes, electroacoustic composers tend to be guided more by ‘logical construction than by intuition’ (p. 70), a troubling notion that has forced us to consider the intuitive experience of our digital ecosystem’s emergent behaviours. To emphasize a phenomenology of listening, we decided to remove the visual components that we felt had dominated the aural experience of Swarmscape. We began to listen to the piece with our eyes closed, asking whether the experience was comparable without its visual aid. Although observing Swarmscape’s behaviour helps its audience contextualize the composition, the inclusion of visual components de-emphasizes the deep listening initiatives central to the pedagogical approaches of acoustic ecology. Reliance on visual media for analysis, including the complex visual displays of virtual instruments, is central to electroacoustic practices that emphasize the physical properties of sound objects as arbitrary signals. However, soundscape composition depends on a contextual relationship between its source material and that material’s semantic content for the work to be recognized as soundscape at all. Therefore, by removing visual components, Auspex deliberately shifts away from what Barry Truax (2022) calls the ‘signal transfer model’ (p. 14) to emphasize in situ listening, or listening in context.

Figure 4.

The Augury at the founding of Rome (Fontana 1573)

Michel Serres (1995) deems the augur the ‘inaugural logician’ (p. 77). History suggests that augury played an important role in the founding of Ancient Rome, though the use of bird diviners to translate the will of the gods purportedly predates Rome by at least a millennium. The augur begins the ritual by using a wand to outline templa in the sky: vector spaces in which the auspices might be observed. With the removal of visual media, the templum of Auspex is defined in physical space by a scalable multichannel speaker array. This templum is populated with a corpus of sonic species, inviting the listener to take the auspices. Auspex uniquely pairs an agent-based artificial life ecosystem with corpus-based mapping, thereby offering a meta-contextual relationship to the acoustic niche hypothesis from a behavioural perspective. The ecosystem does not rely on environmental recordings as source material to generate ecological context; instead, it relies on the eco-assembly of sound objects by frequency to establish its various species and agents. In this way, the resulting soundscape composition maintains a meta-contextual relationship to the natural soundscape from a primarily behavioural perspective.

It may be important to distinguish this output from what is commonly considered music. As David Dunn (1998) writes, rather than integrating all sonic phenomena into our cultural understanding of music, sonic art can expand human engagement with sonic phenomena beyond music ‘as a prime integrating factor in the understanding of our place within the biosphere's fabric of mind’ (p. 3). From this perspective, treating environmental sounds as additions to human music anthropomorphizes them, absorbing them into human experience. Instead, soundscape and soundscape composition can be considered as recontextualizing sound to (re)connect humans with their wider environment. It is therefore important to acknowledge the legitimacy of natural behavioural complexity as gesture, regardless of its status as music.

Conclusion

Auspex is best described as a living process. Our ongoing research into the mechanisms of behaviour has expanded our understanding of what constitutes creativity, control, and composition. We hope that by unifying naturally organised structures with naturally organised sounds, we can uncover and re-engage with patterns fundamental to the problems we face as a species at odds with our world. Ultimately, while the work involves the principles of competition applied by game theorists, it is cooperation that is its defining feature. Decentralization has become not only a dominant theme of the work but also an important aspect of our artistic collaboration. We have failed when attempting to gain control over the system or each other, and have been rewarded by allowing this sometimes violent and obscene environment to thrive. Its best performances were in private, as though it did not want our role in its existence to be apotheosized. Thus, acknowledging Auspex as art or music is less important than acknowledging it as a living thing, just as perceiving the agency of individual agents is less important than acknowledging the collective consciousness of the swarming species. Auspex is an ecosystem of interdependent parts whose sentience includes not only so-called ‘living’ organisms but also their ‘abiotic’ environment, the factors of which are necessary for the survival of life.

Acknowledgements. We would like to thank our professors Ricardo Dal Farra, Kevin Austin, Barry Truax, and Teresa Connors, the Jorge Tadeo Lozano University in Bogotá, and the FluCoMa team at the University of Huddersfield.

References

Appleton, Jon H. (1996). Musical Storytelling. In Contemporary Music Review 15/2: 67-71.

Bisig, D. / Neukom, M. / Flury, J. (2008). Interactive Swarm Orchestra, an Artificial Life Approach to Computer Music. In Proceedings of the International Computer Music Conference, Belfast, Ireland.

Blackwell, T. / Young, M. (2004). Self-Organised Music. In Organised Sound 9/2: 123-136.

Cassidy, M. (2021, December 1). BoidGran Demo 1 [Video]. YouTube. https://www.youtube.com/Z1TT66xKQCAs

Dunn, D. (1998). Nature, Sound Art and the Sacred. In The Book of Music and Nature (2nd ed.): 95-107. Middletown: Wesleyan University Press.

Ermentrout, B. (1991). An Adaptive Model for Synchrony in the Firefly Pteroptyx malaccae. In Journal of Mathematical Biology 29: 571-585.

FluCoMa. (n.d.). Retrieved September 15, 2023, from https://www.FluCoMa.org

Fontana, G. (1573). The Brothers, Disputing the Founding of Rome, Consult the Augurs [Etching]. The British Museum; London.

Gibson, James J. (1979). The Ecological Approach to Visual Perception. Boston: Houghton Mifflin.

Keller, D. / Truax, B. (1998). Ecologically Based Granular Synthesis. International Conference on Mathematics and Computing.

Krause, B. L. (1993). The Niche Hypothesis: A Virtual Symphony of Animal Sounds, the Origin of Musical Expression and the Health of Habitats. In The Soundscape Newsletter 6: 5-10.

Reynolds, C. (1987). Flocks, Herds, and Schools: A Distributed Behavioral Model. In SIGGRAPH Computer Graphics, 21/4: 25-34.

Schacher, J. C. / Bisig, D. / Kocher, P. (2014). The Map and the Flock: Emergence in Mapping with Swarm Algorithms. In Computer Music Journal 38/3: 49-63.

Schwarz, D. (2007). Corpus-Based Concatenative Synthesis. In IEEE Signal Processing Magazine 24/2: 92-104. doi:10.1109/MSP.2007.323274

Serres, M. (1995). The Natural Contract. Ann Arbor: University of Michigan Press.

Smalley, D. (1997). Spectromorphology: Explaining sound-shapes. In Organised Sound 2/2: 107-126.

Truax, B. (1994). Discovering Inner Complexity: Time Shifting and Transposition with a Real-time Granulation Technique. In Computer Music Journal 18/2: 38-48.

Truax, B. (2018). Editorial: Context Based Composition. In Organised Sound 18/1: 1-2.

Zils, A. / Pachet, F. (2001). Musical Mosaicing. In Digital Audio Effects (DAFx) 2: 135.

Submitted: 16 September 2023

Reviewed: 9 October 2023

Accepted: 20 November 2023